The Ultimate Guide on Speech Recognition API for You

Recently, I was asked to build a voice-controlled web application, even though I had never worked with anything like it and did not know how it worked.

So I started doing my homework on speech recognition. It was overwhelming at first, but I found some useful resources on the Internet. I came across a blog post on codeburst by Kai Wedekind, and after reading it, most of my doubts about speech recognition were cleared up.

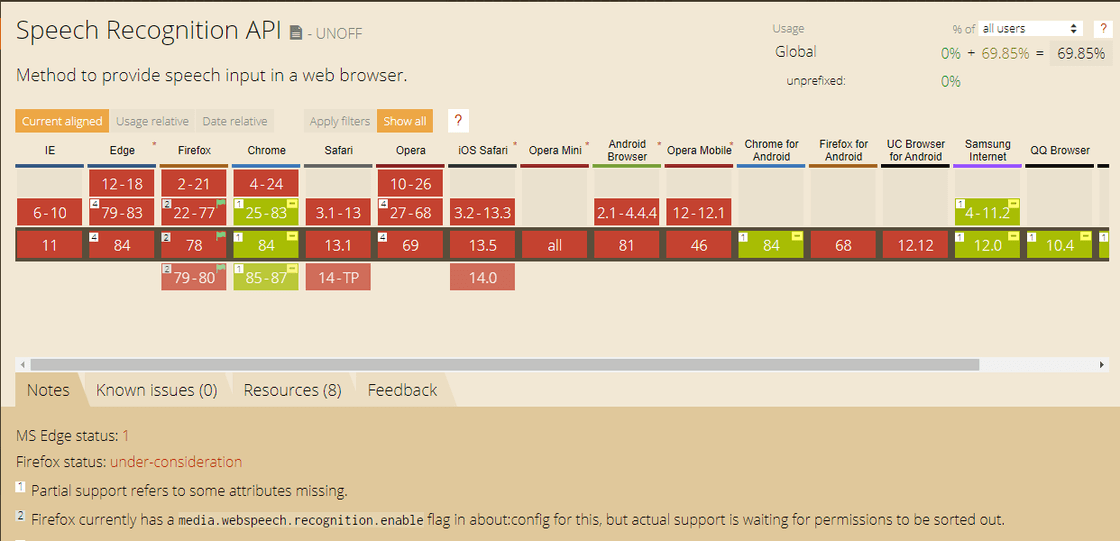

Before starting to code my very first voice-enabled web application, I did a little research on speech recognition. There are many APIs available for voice recognition, such as Google Speech and IBM Watson, but modern browsers already ship with a built-in Web Speech API.

Source: https://caniuse.com/#search=Speech

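The Web Speech API is an experimental API. Its speech recognition part works in Google Chrome (under the webkit prefix), while support in Mozilla Firefox is limited, so check the compatibility table linked above. If you have ever made an AJAX call in JavaScript, this is going to be very simple for you.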

Let's start by checking whether our browser supports the Web Speech API (I recommend using Google Chrome).
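A minimal sketch of such a check. The helper name `getSpeechRecognition` is my own (hypothetical). It takes the global object as a parameter so it can also be tried outside a browser, and it assumes Chrome exposes the constructor under the `webkit` prefix.

```javascript
// Hypothetical helper: returns the SpeechRecognition constructor, or null if unsupported.
// Chrome exposes it as webkitSpeechRecognition, so both names are checked.
function getSpeechRecognition(globalObject) {
  return globalObject.SpeechRecognition || globalObject.webkitSpeechRecognition || null;
}

// In a browser, pass window; elsewhere fall back to an empty object.
const SpeechRecognitionImpl = getSpeechRecognition(
  typeof window !== 'undefined' ? window : {}
);

if (SpeechRecognitionImpl) {
  console.log('Speech recognition is supported.');
} else {
  console.log('Speech recognition is not supported in this environment.');
}
```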

Once this check passes, you know your browser is ready for further development.

If you are still with me, you have a modern browser.

Now create a new speech recognition object.

let recognition = new (window.SpeechRecognition || window.webkitSpeechRecognition)();

This recognition object has many properties, methods, and event handlers. You can read about them in the API documentation.

We will focus on the recognition.onresult event handler, which receives the response from the API. When recognition starts, the browser asks for permission to access the microphone and shows a dialog (allow it, of course).
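If the permission is refused, the recognition object reports an error instead of a result. Here is a rough sketch of handling that, assuming a helper name of my own (`attachLifecycleHandlers`, hypothetical) and using the `onstart`, `onerror`, and `onend` handlers of the recognition object.

```javascript
// Hypothetical helper: attaches basic lifecycle handlers to a recognition object.
// `log` is any function that accepts a message string (console.log by default).
function attachLifecycleHandlers(recognition, log = console.log) {
  recognition.onstart = () => log('Listening...');
  recognition.onerror = (event) => {
    // 'not-allowed' is the error code reported when microphone access is denied
    if (event.error === 'not-allowed') {
      log('Microphone permission was denied.');
    } else {
      log('Recognition error: ' + event.error);
    }
  };
  recognition.onend = () => log('Recognition stopped.');
  return recognition;
}
```

In the browser you would call `attachLifecycleHandlers(recognition)` before `recognition.start()`.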

Now whatever you say into the microphone will be logged to the console.

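recognition.onresult = function (e) {
  // e.results is a list of results; index 0 of each result is its most likely alternative
  const transcript = Array.from(e.results)
    .map(result => result[0])
    .map(result => result.transcript)
    .join('');
  console.log('You Said: ', transcript);
};

// Start listening; this is what triggers the microphone permission dialog
recognition.start();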
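To make the shape of `e.results` easier to see, here is a small sketch that runs the same extraction on a hand-made mock. The helper name `extractTranscript` and the mock values are made up for illustration; a real event carries the browser's own result objects.

```javascript
// Hypothetical helper: joins the top alternative of every result into one string.
function extractTranscript(results) {
  return Array.from(results)
    .map((result) => result[0].transcript)
    .join('');
}

// Mock of the array-like shape of e.results (values are invented for illustration)
const mockResults = {
  length: 2,
  0: { length: 1, 0: { transcript: 'hello ', confidence: 0.9 } },
  1: { length: 1, 0: { transcript: 'world', confidence: 0.8 } },
};

console.log('You Said: ', extractTranscript(mockResults));
```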

Important Properties

In the snippet above, the result is logged only after the speech ends. Several properties let you control how transcripts are delivered, so let's look at a few of them. Setting recognition.interimResults = true (it is false by default) lets you log the transcript while you are still speaking.
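With interim results switched on, each result carries an `isFinal` flag that tells you whether the text can still change. A minimal sketch of using it, assuming a helper name of my own (`splitTranscripts`, hypothetical) and mock data in place of a real event:

```javascript
// Hypothetical helper: separates finalized text from text still being recognized.
function splitTranscripts(results) {
  let finalText = '';
  let interimText = '';
  for (const result of Array.from(results)) {
    if (result.isFinal) {
      finalText += result[0].transcript;
    } else {
      interimText += result[0].transcript;
    }
  }
  return { finalText, interimText };
}

// Mock results: the first phrase is settled, the second is still changing
const interimMock = {
  length: 2,
  0: { length: 1, isFinal: true, 0: { transcript: 'turn on ' } },
  1: { length: 1, isFinal: false, 0: { transcript: 'the li' } },
};

console.log(splitTranscripts(interimMock));
```

Inside a real `onresult` handler you would pass `e.results` instead of the mock.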

You will notice that the recognition system stops once the speech ends. To keep it listening, set recognition.continuous = true, and it stays ready for more speech. If the transcript is not accurate enough, you can also collect alternative transcripts by setting the number of alternatives you want, for example recognition.maxAlternatives = 2 (the default is 1).
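When more than one alternative is requested, each result holds several candidates, each with a `transcript` and a `confidence` value. The sketch below lists them for one result. The helper name `listAlternatives` and the mock values are my own, for illustration only.

```javascript
// Hypothetical helper: lists every alternative of one result, highest confidence first.
function listAlternatives(result) {
  return Array.from(result)
    .map((alt) => ({ transcript: alt.transcript, confidence: alt.confidence }))
    .sort((a, b) => b.confidence - a.confidence);
}

// Mock of a single result as it might look with maxAlternatives = 2 (values invented)
const alternativesMock = {
  length: 2,
  isFinal: true,
  0: { transcript: 'wreck a nice beach', confidence: 0.41 },
  1: { transcript: 'recognize speech', confidence: 0.82 },
};

console.log(listAlternatives(alternativesMock));
```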

Handling Accents during Speech Recognition

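If you are developing your app for a language other than English, you can improve the results by setting the language, for example recognition.lang = "hi-IN" (hi for Hindi, IN for India). If you do not set it, the browser falls back to the language of the page (its lang attribute) or to its own default language.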
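A small sketch of setting the language safely. The helper name `setRecognitionLanguage` is hypothetical. It uses the standard `Intl.getCanonicalLocales` function to normalize the tag, which only checks that the tag is well formed, not that the recognizer actually supports that language.

```javascript
// Hypothetical helper: normalizes a language tag and assigns it to recognition.lang.
function setRecognitionLanguage(recognition, languageTag) {
  // Throws a RangeError if the tag is not structurally valid (for example, contains spaces)
  const [canonicalTag] = Intl.getCanonicalLocales(languageTag);
  recognition.lang = canonicalTag;
  return canonicalTag;
}

// A plain object stands in for the recognition object here
const languageMock = {};
setRecognitionLanguage(languageMock, 'hi-in');
console.log(languageMock.lang);
```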

Here's my Git Repository: https://github.com/imshivamgupta/speechrecognitionapi.git
